In a previous post, I discussed solving intermittent issues, a.k.a. building more robust automated tests. A solution I did not mention is the simple “just give it another chance”. When you have big, long suites of automated tests (for functional tests it is quite common to have suites with thousands of tests that last for hours), you might get a couple of tests randomly failing for unknown reasons. Why not just launch those failed tests again? If they fail once more, you are hitting a real problem. If they succeed, you might have hit an intermittent problem and you might decide to just ignore it.

Re-executing failed tests (--rerunfailed) appeared in Robot Framework 2.8, and since version 2.8.4 rebot has a new option (--merge) to merge outputs from different runs. As explained in the User Guide, those two options make a lot of sense when used together:

```bash
# first execute all tests
pybot --output original.xml tests
# then re-execute failing
pybot --rerunfailed original.xml --output rerun.xml tests
# finally merge results
rebot --merge original.xml rerun.xml
```

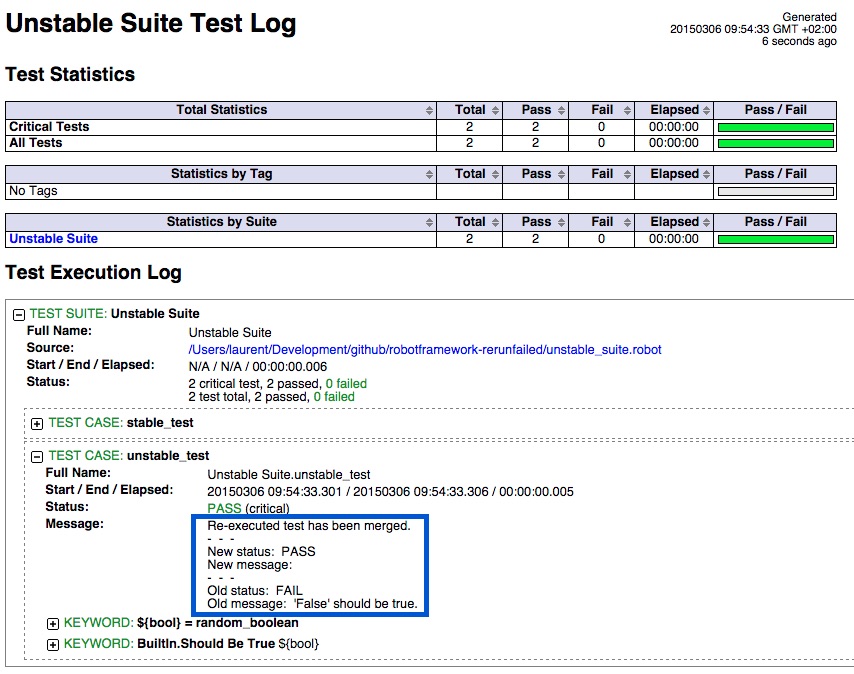

This will produce a single report where the second execution of the failed test is replacing the first execution. So every test appears once and for those executed twice, we see the first and second execution message:

Here, I propose to go a little bit further and show how to use --rerunfailed and --merge while:

- writing output files to an “output” folder instead of the execution folder (use of --outputdir). It is common practice to write the output files to a custom folder, but it makes the whole pybot call syntax a bit more complex.

- giving access to the log files of the first and second executions via links displayed in the report (use of Metadata). Sometimes having the “new status” and “old status” (as in the previous screenshot) is not enough: we want details on what went wrong in each execution, and the merged report alone does not give them.

To show this let’s use a simple unstable test:

```robotframework
*** Settings ***
Library    String

*** Test Cases ***
stable_test
    should be true    ${True}

unstable_test
    ${bool} =    random_boolean
    should be true    ${bool}

*** Keywords ***
random_boolean
    ${nb_string} =    generate random string    1    [NUMBERS]
    ${nb_int} =    convert to integer    ${nb_string}
    Run keyword and return    evaluate    (${nb_int} % 2) == 0
```

The unstable_test will fail 50% of the time (the random digit is even for 5 of the 10 values 0-9), and the stable_test will always succeed.

And so, here is the script I propose to launch the suite:

```bash
#!/bin/bash

# clean previous output files
rm -f output/output.xml
rm -f output/rerun.xml
rm -f output/first_run_log.html
rm -f output/second_run_log.html

echo
echo "#######################################"
echo "# Running portfolio a first time #"
echo "#######################################"
echo
pybot --outputdir output "$@"

# we stop the script here if all the tests were OK
if [ $? -eq 0 ]; then
    echo "we don't run the tests again as everything was OK on first try"
    exit 0
fi
# otherwise we go for another round with the failing tests

# we keep a copy of the first log file
cp output/log.html output/first_run_log.html

# we launch the tests that failed
echo
echo "#######################################"
echo "# Running again the tests that failed #"
echo "#######################################"
echo
pybot --outputdir output --nostatusrc --rerunfailed output/output.xml --output rerun.xml "$@"
# Robot Framework generates file rerun.xml

# we keep a copy of the second log file
cp output/log.html output/second_run_log.html

# Merging output files
echo
echo "########################"
echo "# Merging output files #"
echo "########################"
echo
rebot --nostatusrc --outputdir output --output output.xml --merge output/output.xml output/rerun.xml
# Robot Framework generates a new output.xml
```

And here is an example execution, in the case where the unstable test fails once and then succeeds:

```
$ ./launch_test_and_rerun.sh unstable_suite.robot

#######################################
# Running portfolio a first time #
#######################################

==========================================================
Unstable Suite
==========================================================
stable_test                                       | PASS |
----------------------------------------------------------
unstable_test                                     | FAIL |
'False' should be true.
----------------------------------------------------------
Unstable Suite                                    | FAIL |
2 critical tests, 1 passed, 1 failed
2 tests total, 1 passed, 1 failed
==========================================================
Output: path/to/output/output.xml
Log: path/to/output/log.html
Report: path/to/output/report.html

#######################################
# Running again the tests that failed #
#######################################

==========================================================
Unstable Suite
==========================================================
unstable_test                                     | PASS |
----------------------------------------------------------
Unstable Suite                                    | PASS |
1 critical test, 1 passed, 0 failed
1 test total, 1 passed, 0 failed
==========================================================
Output: path/to/output/rerun.xml
Log: path/to/output/log.html
Report: path/to/output/report.html

########################
# Merging output files #
########################

Output: path/to/output/output.xml
Log: path/to/output/log.html
Report: path/to/output/report.html
```

So, the first part is done: we have a script that launches the suite twice if needed and puts all the output in the “output” folder. Now let’s update the “Settings” section of our test to include links to the first and second run logs:

```robotframework
*** Settings ***
Library     String
Metadata    Log of First Run     [first_run_log.html|first_run_log.html]
Metadata    Log of Second Run    [second_run_log.html|second_run_log.html]
```

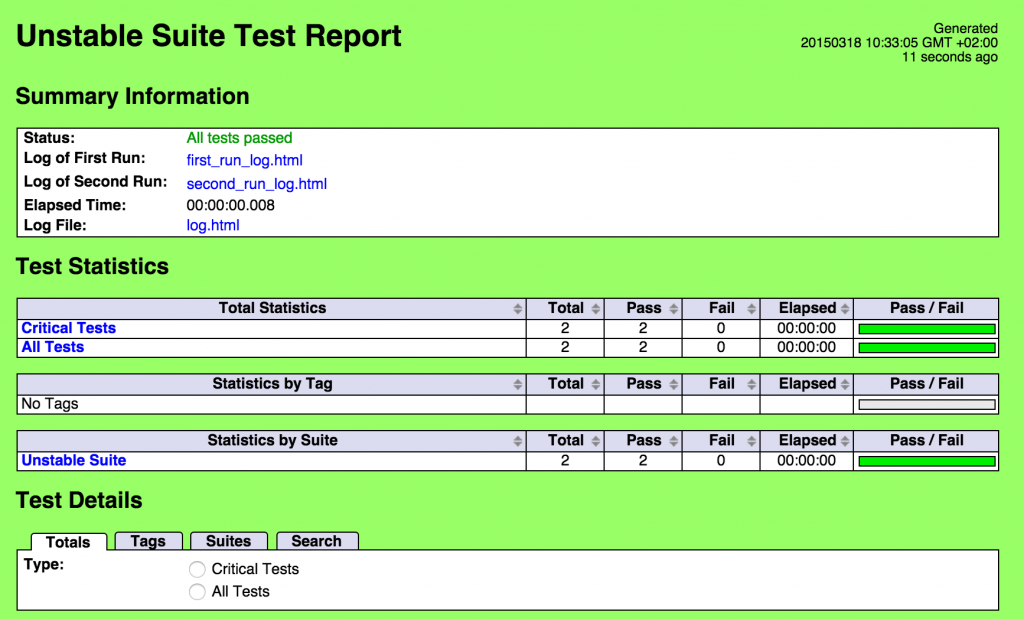

If we launch our script again, we will get a report with links to first and second run in the “summary information” section:
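Here is one possible variation, sketched as an assumption rather than taken from the post. Because the Metadata above lives in the suite itself, the links also show up when everything passed on the first try, even though the two log files were never written. Rebot’s `--metadata` option can instead add the same links to the top-level suite at merge time, so they only appear when a re-run actually happened. The function name `merge_with_log_links` is hypothetical, and `rebot` is assumed to be on the PATH:

```bash
# Sketch: merge the two runs and attach links to both logs as top-level
# metadata, only at merge time. Hypothetical function name; assumes the
# layout of the launch script: output/output.xml, output/rerun.xml and
# output/first_run_log.html, output/second_run_log.html.
merge_with_log_links() {
    local outdir=${1:-output}
    rebot --nostatusrc --outputdir "$outdir" --output output.xml \
          --metadata "Log of First Run:[first_run_log.html|first_run_log.html]" \
          --metadata "Log of Second Run:[second_run_log.html|second_run_log.html]" \
          --merge "$outdir/output.xml" "$outdir/rerun.xml"
}
```

It would replace the final `rebot` line of the launch script (`merge_with_log_links output`). The two Metadata lines could then be removed from the suite’s Settings.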

The script and the test can be found in a GitHub repository. Feel free to comment on this topic if you find more tips about those Robot options.

This prompted me to rewrite my test running scripts (from .bat to grunt, as it’s cross-platform), including test rerunning. Suddenly we have way more stable tests. Thanks for this post.

```bat
:: clean previous output files
@echo off
rd /s /q Output
echo #######################################
echo # Running tests first time #
echo #######################################
cmd /c pybot --outputdir Output %*
:: we stop the script here if all the tests were OK
if errorlevel 1 goto DGTFO
echo #######################################
echo # Tests passed, no rerun #
echo #######################################
exit /b
:: otherwise we go for another round with the failing tests
:DGTFO
:: we keep a copy of the first log file
copy Output\log.html Output\first_run_log.html /Y
:: we launch the tests that failed
echo #######################################
echo # Running again the tests that failed #
echo #######################################
cmd /c pybot --outputdir Output --nostatusrc --rerunfailed Output/output.xml --output rerun.xml %*
:: Robot Framework generates file rerun.xml
:: we keep a copy of the second log file
copy Output\log.html Output\second_run_log.html /Y
:: Merging output files
echo ########################
echo # Merging output files #
echo ########################
cmd /c rebot --nostatusrc --outputdir Output --output output.xml --merge Output/output.xml Output/rerun.xml
:: Robot Framework generates a new output.xml
```

Here you go, a .bat file to be executed on Windows.

Thanks for the contribution!

```bat
::Executable
:: clean previous output files
@echo off
rd /s /q Output
echo #######################################
echo # Running tests first time #
echo #######################################
cmd /c pybot --outputdir Output %*
:: we stop the script here if all the tests were OK
if errorlevel 1 goto DGTFO
echo #######################################
echo # Tests passed, no rerun #
echo #######################################
exit /b
:: otherwise we go for another round with the failing tests
:DGTFO
:: we keep a copy of the first log file
copy Output\log.html Output\first_run_log.html /Y
:: we launch the tests that failed
echo #######################################
echo # Running again the tests that failed #
echo #######################################
cmd /c pybot --nostatusrc --outputdir Output --rerunfailed Output/output.xml --output rerun.xml %*
:: Robot Framework generates file rerun.xml
:: we keep a copy of the second log file
copy Output\log.html Output\second_run_log.html /Y
:: Merging output files
echo ########################
echo # Merging output files #
echo ########################
cmd /c rebot --nostatusrc --outputdir Output --output output.xml --merge Output/output.xml Output/rerun.xml
:: Robot Framework generates a new output.xml
```

Thanks a lot!

Can this be extended to work with Robot tags too?

Sure, you can use --include/--exclude when you run/rerun tests, and use --noncritical as well with pybot/rebot.

I am using rebot to merge all the output.xml files created in batches. When I try to use --rerunfailed on the output.xml produced by rebot, Robot complains that it cannot find my test case name. What could the reason be? Something like this:

```
[ ERROR ] Suite 'Tests' contains no tests named 'Tests.Benefit Information Content SLT.Benefit Information Learn More Attribute Validation and Close Enrollment'.
```

Meanwhile, I am trying the workaround of running --rerunfailed after pybot instead of rebot, but that also means a lot of duplicate code.

Any suggestions will be appreciated.

I don’t see any obvious explanation for this.

You might try to ask the question on the mailing list: https://groups.google.com/forum/#!forum/robotframework-users

Never mind. I ended up creating a function that checks the subprocess returncode and then executes rerunfailed if the returncode was not 0.

Thanks for your help! Kudos for the great blog and the prompt reply.

Any thoughts about re-running a whole test suite when there is a failure within it? In my experience (and indeed in the environment I am in now), having the luxury of mostly or only stand-alone tests just isn’t a possibility. Most of my automated testing tends to be larger functional/end-to-end tests where you can’t just test things atomically.

For example, I have a “suite of suites” that makes up our smoke tests. They are all multi-page walkthroughs of a user application. You can’t test “page 5” without testing all the previous pages first. However, various things may cause that particular page’s test to fail, and I’d like to re-run the whole suite again, not just that individual test case.

Anyway, thanks for your post regardless. I’ll see if I can come up with a reasonable solution on my end and will post it if I come up with something worthwhile.

First, if what you want is to relaunch your whole smoke test suite when there is at least one failure, you can write a shell/batch script that is a simpler version of the one in the post. Basically: 1) run the tests a first time, 2) exit if there is no error, 3) launch the tests a second time (no need for --rerunfailed and --merge, since you want to relaunch everything).

Then, it’s really a mess if you have no finer granularity than rerunning the whole thing each time… Did you try nested levels of setup/teardown that would let you run each sub-suite independently?
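The simpler variant from the first paragraph of this reply could look roughly like the sketch below. It assumes Robot Framework 3.x+ (`robot` instead of `pybot`). The function name and the `output/first` and `output/second` folders are illustrative choices; separate folders keep the logs of both runs:

```bash
# Sketch: re-run the WHOLE suite once if the first run had any failure.
# Hypothetical function name; the arguments are passed straight to robot.
run_whole_suite_twice() {
    local rc=0
    robot --outputdir output/first "$@" || rc=$?
    if [ "$rc" -eq 0 ]; then
        echo "Everything passed on the first try, no second run"
        return 0
    fi
    # No --rerunfailed and no merge: the second run simply replaces the first.
    rc=0
    robot --outputdir output/second "$@" || rc=$?
    return "$rc"
}
```

For example, `run_whole_suite_twice smoke_tests/`. When a second run happens, the function returns that run’s return code, which is what a CI job would then see.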

Hi,

I am facing the same issue too. I want to rerun the whole test suite if even a single test case fails.

Can you please provide the script to achieve this?

I run my robot tests via Jenkins.

Is there any way I can re-run the test only if there is a failure?

If I include the re-run after the pybot command, then the Jenkins job fails if there was no failure.

That’s included in the script I am sharing.

Look at this statement:

```bash
if [ $? -eq 0 ]; then
    echo "we don't run the tests again as everything was OK on first try"
    exit 0
fi
```

Hello,

I am using the Robot plugin for Eclipse to write test suites, and the Ant library to execute them from the command line.

I tried every way of re-running the failed cases, but without success. I tried this approach too, but I am still stuck.

I am new to automation, so can you please share a sample project with me, so I can try it accordingly?

Thanks

I don’t have more to share than the script shown here (and I don’t use Eclipse or Ant). What kind of error do you get?

This works fine if you’re just running the raw “Execute Windows batch command” from Jenkins without the Robot Framework post-build action “Publish Robot Framework test results”. If you use these two together, for example when a test fails the first time, goes into the rerun, and the output files are then merged, the published Robot Framework result is a fail even though the test passed the second time around. This solution works well with the “Execute Windows batch command” build step alone, but when I add the post-build action, it fails, since the Robot plugin for Jenkins reads the failure in output.xml and the results show Fail. I’m sure there is some workaround, but for now I don’t know it and need to figure this part out.

What argument do you use in the rebot call?

I am using this:

```bash
rebot --nostatusrc --outputdir output --output output.xml --merge output/output.xml output/rerun.xml
```

This produces a new output.xml that merges both executions, and if the test succeeded in the second execution, it is considered OK.

Hi Laurent,

The problem is that the Robot Framework plugin for Jenkins only parses the output.xml looking for failures, and if it finds any, regardless of rerun, rebot, etc., it produces an overall failed status for the build.

Also, even if I run without the Robot Framework plugin, with just Jenkins and an “Execute Windows batch command” step, it produces a fail as well, even after the rerun, because it reads a fail when it parses the output.xml created by Robot Framework.

The only place I’ve seen this working successfully is with cmd alone. I get the whole idea that the second execution is then considered OK and passed, but Jenkins shows a fail because it only parses the output.xml, which contains a fail from the first run.

If you use rebot the way I describe it in the article, the output.xml should contain a merge of both runs, and if the second run of the failed tests was OK (the tests succeeded), then the final output.xml will be OK. I use it daily with the Jenkins plugin and it works fine. Maybe give more details about the steps you are doing so that we can help you understand what is going on.

Here’s what I get in Console:

```
########################
# Merging output files #
########################
[ ERROR ] option -û not recognized
Try --help for usage information.
Build step 'Execute Windows batch command' marked build as failure
Robot results publisher started...
-Parsing output xml:
Done!
-Copying log files to build dir:
Done!
-Assigning results to build:
Done!
-Checking thresholds:
Done!
Done publishing Robot results.
Finished: FAILURE
```

Here’s what I have in the “Execute Windows batch command” build step:

```bat
:: clean / delete previous output files
:: If needed to delete folder alone provide 'rd /s /q SOFTWARE' without the quotes instead of del
@echo off
::cd C:\Users\gfung\.jenkins\workspace\COMP-Smoketest-Admin-Side\COMP\TestSuites\robotframework-rerunfailed-master\
::cd C:\Users\gfung\.jenkins\workspace\SMP-API-Talent_Pool\COMP\TestSuites\robotframework-rerunfailed-master\
cd C:\Users\gfung\.jenkins\workspace\RERUN\
::cd C:\Users\gfung\.jenkins\workspace\SMP-API-Talent_Pool\COMP\TestSuites\
rd /s /q OG_TEST_RESULTS
::del output.xml
echo #######################################
echo # Running tests first time #
echo #######################################
::pybot --outputdir OG_TEST_RESULTS unstable_suite.robot
::cmd /c pybot --outputdir OG_TEST_RESULTS ReRunTest.robot
cmd /c pybot --outputdir OG_TEST_RESULTS ReRunTest.robot
::cmd /c pybot --outputdir C:\Users\gfung\.jenkins\workspace\COMP\TestSuites\ %*
:: we stop the script here if all the tests were OK
:: if errorlevel 1 echo # FAILED Going to Rerun now! #
if errorlevel 1 goto DGTFO
echo #######################################
echo # Tests passed, no rerun #
echo #######################################
exit /b
:: otherwise we go for another round with the failing tests
:DGTFO
:: we keep a copy of the first log file to first_run_log.html
copy OG_TEST_RESULTS\log.html OG_TEST_RESULTS\first_run_log.html /Y
:: we launch the tests that failed
echo #######################################
echo # Running again the tests that failed #
echo #######################################
cmd /c pybot --outputdir OG_TEST_RESULTS --nostatusrc --rerunfailed OG_TEST_RESULTS/output.xml --output rerun.xml ReRunTest.robot
::--output.xml rerun.xml %*
:: Robot Framework generates file rerun.xml
:: we keep a copy of the second log file
copy OG_TEST_RESULTS\log.html OG_TEST_RESULTS\second_run_log.html /Y
:: Merging output files
echo ########################
echo # Merging output files #
echo ########################
cmd /c rebot -–nostatusrc -–outputdir OG_TEST_RESULTS –output output.xml –merge OG_TEST_RESULTS/output.xml OG_TEST_RESULTS/rerun.xml
:: Robot Framework generates a new
```

I’m using a Windows machine setup.

Thank you laurentbristiel!!

I finally got this code working on both operating systems, Windows Server 2012 R2 and OS X Yosemite 10.10.

All I needed to do was provide an “Execute shell” step in Jenkins on my OS X Yosemite machine with:

```bash
cd /Users/Admin/Automation/TestSuites/
./launch_test_and_rerun.sh Re-Run-Test.robot
```

And on Windows Server 2012 R2, all I needed to do was provide an “Execute Windows batch command” with:

```bat
cd C:\Users\gfung\Desktop\
CI_RErun.bat ReRunTest.robot
```

With both, I just added the post-build action “Publish Robot Framework test results” and an e-mail notification, and that’s it!!

I am observing that the failed tests never run again on Jenkins.

If pybot fails some tests, the reports are generated directly.

It directly generates the reports without checking whether the tests exited with an error code other than 0, and thus doesn’t rerun the failed tests.

Please note that I am executing the tests on a Windows machine.

Command:

```bat
del Reports\*.* /s /q
pybot -t %testCase% -d Reports -o output.xml Test_Suites
IF NOT ERRORLEVEL 0 (
    pybot --nostatusrc -d Reports --rerunfailed Reports\output.xml -o rerun1.xml C:\Automation\Web\Test_Suites
    rebot -d Reports --merge Reports\output.xml Reports\rerun1.xml
)
```

Even if there was a failure, the If condition never got executed and reports were generated.

Hi Amol,

I’m using this script on a Windows machine and it works fine. In the Jenkins configuration I have an “Execute Windows batch command” that fetches the batch script, and everything runs fine with the Robot plugin for Jenkins.

This is the “Execute Windows batch command” from my Jenkins build configuration:

```bat
cd C:\Users\gfung\Desktop\
SMP_API_TESTSUITE_QA052.bat SMP-API-Talent_Pool.robot
```

This is my .bat file:

```bat
::C:\Users\gfung\.jenkins\workspace\SMP-API-Talent_Pool
@echo off
::cd C:\Users\gfung\.jenkins\workspace\COMP-Smoketest-Admin-Side\COMP\TestSuites\robotframework-rerunfailed-master\
::cd C:\Users\gfung\.jenkins\workspace\SMP-API-Talent_Pool\COMP\TestSuites\robotframework-rerunfailed-master\
cd C:\Users\gfung\.jenkins\workspace\SMP-API-Talent_Pool\TestSuites\
::cd C:\Users\gfung\.jenkins\workspace\SMP-API-Talent_Pool\COMP\TestSuites\
rd /s /q OG_TEST_RESULTS
::del output.xml
echo #######################################
echo # Running tests first time #
echo #######################################
::pybot --outputdir OG_TEST_RESULTS unstable_suite.robot
::cmd /c pybot --outputdir OG_TEST_RESULTS ReRunTest.robot
cmd /c pybot --outputdir OG_TEST_RESULTS -v RNOAUTH_HOST:QA01 --listener TestRailListener:479 SMP-API-Talent_Pool.robot
::cmd /c pybot --outputdir C:\Users\gfung\.jenkins\workspace\COMP\TestSuites\ %*
:: we stop the script here if all the tests were OK
:: if errorlevel 1 echo # FAILED Going to Rerun now! #
if errorlevel 1 goto DGTFO
echo #######################################
echo # Tests passed, no rerun #
echo #######################################
exit /b
:: otherwise we go for another round with the failing tests
:DGTFO
:: we keep a copy of the first log file to first_run_log.html
copy OG_TEST_RESULTS\log.html OG_TEST_RESULTS\first_run_log.html /Y
:: we launch the tests that failed
echo #######################################
echo # Running again the tests that failed #
echo #######################################
::cmd /c pybot --outputdir OG_TEST_RESULTS --nostatusrc --rerunfailed OG_TEST_RESULTS/output.xml --output rerun.xml ReRunTest.robot
cmd /c pybot --nostatusrc --outputdir OG_TEST_RESULTS --rerunfailed OG_TEST_RESULTS/output.xml --output rerun.xml %*
::--output.xml rerun.xml %*
:: Robot Framework generates file rerun.xml
:: we keep a copy of the second log file
copy OG_TEST_RESULTS\log.html OG_TEST_RESULTS\second_run_log.html /Y
:: Merging output files
echo ########################
echo # Merging output files #
echo ########################
cmd /c rebot --nostatusrc --outputdir OG_TEST_RESULTS --output output.xml --merge OG_TEST_RESULTS/output.xml OG_TEST_RESULTS/rerun.xml
```

Hi,

I need help removing the suite teardown from my report.html.

My execution is over and all test cases passed, but just because the suite teardown failed, the entire suite and all its test cases are shown as failed. So I want to regenerate a report that doesn’t contain the suite teardown. Can somebody please help me?

@laurentbristiel:

Is there some way I can use this batch script to pass test results to TestRail? I tried adding `cmd /c pybot --outputdir OG_TEST_RESULTS -v RNOAUTH_HOST:QA01 --listener TestRailListener:479 SMP-API-Talent_Pool.robot` to the script, but with re-runs I’m not sure where to add it. I tried adding it where you have the cmd /c steps in this batch script, but that causes failures in Jenkins and does not pass the results to TestRail. Please advise.

Is there any way to re-run only the tests that fail with a specific error? For example, if an unstable environment causes intermittent failures and you only want to retry tests that fail with a 502, 503 or 504 response on an API call?

When you re-run the tests, you could do something like:

Where the tests that don’t fail with 50x are tagged with real_failures. This tag could be added in a Test Teardown, using “Set Tags” and checking what caused the failure. This way you would re-run only the failures due to 50x.
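The command this reply refers to is missing from the page. Here is a minimal sketch of one way to do that re-run, under stated assumptions: the `real_failures` tag was set at run time on non-50x failures, Robot Framework 3.x+ is installed, and the first run’s results are in `output/output.xml`. One point matters here. `robot --exclude` is evaluated against the tags written in the test data before execution, so it does not see a tag added at run time with Set Tags. For that reason, the sketch swaps in a different filtering step: rebot, which reads the tags recorded in output.xml, first writes a filtered copy (`transient.xml`, a hypothetical name), and the re-run collects its failures from that copy. If no failure is left to re-run, Robot may end with an error instead of writing `rerun.xml`, so the function checks for that file before merging:

```bash
# Sketch: re-run only the failed tests NOT tagged "real_failures" at run time
# (e.g. by a Test Teardown calling Set Tags). Hypothetical function name;
# assumes Robot Framework 3.x+ and the first run's results in output/output.xml.
rerun_transient_failures() {
    local dir=output
    rm -f "$dir/transient.xml" "$dir/rerun.xml"
    # robot's --exclude only sees tags written in the test data, so the
    # filtering is done by rebot, which reads the tags recorded in output.xml.
    rebot --nostatusrc --outputdir "$dir" --output transient.xml \
          --log NONE --report NONE \
          --exclude real_failures "$dir/output.xml" || return 1
    # Re-run the failures left in the filtered copy.
    robot --nostatusrc --outputdir "$dir" --output rerun.xml \
          --rerunfailed "$dir/transient.xml" "$@" || true
    if [ ! -f "$dir/rerun.xml" ]; then
        echo "Nothing was re-run (no failure without the real_failures tag?)"
        return 1
    fi
    # Merge into the full first-run output, not into the filtered copy.
    rebot --outputdir "$dir" --output output.xml \
          --merge "$dir/output.xml" "$dir/rerun.xml"
}
```

It would be called after the first run, with the same data sources, e.g. `rerun_transient_failures tests`.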

What if we are using pabot? Would this work the same way?

Hi!

I am using a modified script on Jenkins and it works fine.

It reruns the failed tests. In the log and report I can see merged results: e.g. 13 tests passed and 1 failed, and in the rerun it passed too, so in the log and report I see 14 passes.

But on the results page in Jenkins I still see 13/1. The build is marked as passed, but it still ruins my summary (graph and pass/fail), and in the email $ROBOT_PASSED / $ROBOT_FAILED also show the results of the first run.

Is there any way for Jenkins to show the results of test+retest? I would also like to send the results of the retested tests by email. (If there is any way to format it as “passed, retested-passed, failed”, that would be wonderful.)

Thank you for your answer.

m.R.

Strange, on the result page in Jenkins you should see the final test+retest status.

Be aware that the way Jenkins is computing its result page is by using the output.xml retrieved from the workspace.

So re-check that the output.xml is correctly named/located on the workspace and that Jenkins plugin is configured to retrieve it.

Thank you for reply. You are probably right.

What I see in console output:

```
+ rebot -d Results --merge Project/Results/output.xml Project/Results/rerun.xml
Log: /var/lib/jenkins/workspace/Project/Results/log.html
Report: /var/lib/jenkins/workspace/Project/Results/report.html
```

I thought it’s odd that I don’t see output.xml in the report.

Hi, laurentbristiel

I copied your script into my Jenkins post-build “Execute shell” step, but the failed tests never run again. I noticed that there is a “$@” at the end of your pybot command; is it necessary?

Yes, $@ passes to Robot Framework the arguments that were given on the command line when calling this script.

See: https://www.jvl-services.com/content/unix-shell/unix-parameters-0-1-2-3
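Quoting makes a difference when arguments are forwarded this way. `"$@"` passes every argument through unchanged, while an unquoted `$@` re-splits any argument that contains spaces. Here is a tiny illustration with hypothetical helper functions and a made-up suite name, `my suite.robot`:

```bash
# Hypothetical helpers showing how "$@" and $@ forward arguments.
count_args() { echo "$#"; }              # prints how many arguments it received
forward_quoted()   { count_args "$@"; }  # each argument passed through as-is
forward_unquoted() { count_args $@; }    # arguments re-split on whitespace

forward_quoted   "my suite.robot" --include smoke   # prints 3
forward_unquoted "my suite.robot" --include smoke   # prints 4
```

So forwarding with quotes, as in `pybot --outputdir output "$@"`, keeps suite paths that contain spaces intact.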

Thanks, laurentbristiel

I put a bash shebang (#!/bin/bash) in the Jenkins “Execute shell” step, and then everything was OK.

https://unix.stackexchange.com/questions/271877/jenkins-if-statment
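Here is some background on why the shebang helps. According to the Jenkins help for the “Execute shell” build step, a script without a `#!` header line is run by the configured shell with the `-ex` options, so the step aborts as soon as a command returns non-zero. A failing first pybot run therefore ends the step before the `if [ $? -eq 0 ]` check is ever reached. A `#!/bin/bash` header replaces those options. If you would rather keep `-e`, one alternative (an assumption, not taken from the post) is to capture the return code on the right-hand side of `||`, which `set -e` tolerates. The function `first_run` and the variable `FIRST_RUN_RC` are hypothetical, and `robot` assumes Robot Framework 3.x+:

```bash
#!/bin/bash
set -e  # mimic Jenkins' default "-ex": abort on the first failing command

# A failing command on the left-hand side of "||" does not trigger set -e,
# so its return code can be stored and checked afterwards.
first_run() {
    FIRST_RUN_RC=0
    robot --outputdir output "$@" || FIRST_RUN_RC=$?
}
```

For example, `first_run "$@"` followed by `if [ "$FIRST_RUN_RC" -eq 0 ]; then exit 0; fi` keeps the rest of the re-run logic reachable even under `-e`.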

I’m getting this error, please help:

```
TestLink Results
No TestLink results available yet!
Run a build with TestLink plugin enabled to see results here
```

Hi Laurent,

Could you explain this part:

```bash
if [ $? -eq 0 ]; then
    echo "we don't run the tests again as everything was OK on first try"
    exit 0
fi
```

Right now, even when the test fails, I’m still getting “everything was OK…”.

This part is checking if the Robot Process ended with a return code equal to zero.

(the variable $? contains the return code of the last command executed)

If this is the case, then there was no failed test.

If you do have failed tests but you get a return code of zero, this is strange…

Maybe check your script and see if there is not another command that is launched just before the test.

Laurent

Hi Laurent,

I am running robot files in a batch file and using the ‘rebot’ command to merge the output files.

But if there is a failure in one of the scripts, the merged output file is not generated. Is there any command that generates cumulative reports in spite of errors?

```bat
rebot --timestampoutputs --log All.html --logtitle All --output output_All.xml output_1*.xml output_2*.xml output_DCIO_Capabilities_FI_Page*.xml output_3*.xml
```

Or generate a cumulative report from all the reports present in a folder.

Thanks

Naina

Hello,

I am not sure I understand the problem you are facing. It depends on what kind of “error” you get, and you would need to understand why the merged report is not generated (is there an error message?). But this is more of a shell question than a robot/rebot question anyway, so you need to look for help on the Unix shell side.

Good luck with this.

Laurent

Hi Laurent!

Great content. I tried this, however I’m getting an error while trying to rerun the failed tests. Any idea what this is?

```
robot --rerunfailed output.xml --output rerun.xml -i FAILED TestCases
[ ERROR ] Unexpected error: error: bad character range
Traceback (most recent call last):
  File "c:\python27\lib\site-packages\robot\utils\application.py", line 83, in _execute
    rc = self.main(arguments, **options)
  File "c:\python27\lib\site-packages\robot\run.py", line 431, in main
    suite.configure(**settings.suite_config)
  File "c:\python27\lib\site-packages\robot\running\model.py", line 141, in configure
    model.TestSuite.configure(self, **options)
  File "c:\python27\lib\site-packages\robot\model\testsuite.py", line 160, in configure
    self.visit(SuiteConfigurer(**options))
  File "c:\python27\lib\site-packages\robot\model\testsuite.py", line 168, in visit
    visitor.visit_suite(self)
  File "c:\python27\lib\site-packages\robot\model\configurer.py", line 47, in visit_suite
    self._filter(suite)
  File "c:\python27\lib\site-packages\robot\model\configurer.py", line 61, in _filter
    self.include_tags, self.exclude_tags)
  File "c:\python27\lib\site-packages\robot\model\testsuite.py", line 145, in filter
    included_tags, excluded_tags))
  File "c:\python27\lib\site-packages\robot\model\filter.py", line 41, in __init__
    self.include_tests = include_tests
  File "c:\python27\lib\site-packages\robot\utils\setter.py", line 35, in __set__
    setattr(instance, self.attr_name, self.method(instance, value))
  File "c:\python27\lib\site-packages\robot\model\filter.py", line 53, in include_tests
    if not isinstance(tests, TestNamePatterns) else tests
  File "c:\python27\lib\site-packages\robot\model\namepatterns.py", line 23, in __init__
    self._matcher = MultiMatcher(patterns, ignore='_')
  File "c:\python27\lib\site-packages\robot\utils\match.py", line 67, in __init__
    for pattern in self._ensure_list(patterns)]
  File "c:\python27\lib\site-packages\robot\utils\match.py", line 42, in __init__
    self._regexp = self._compile(self._normalize(pattern), regexp=regexp)
  File "c:\python27\lib\site-packages\robot\utils\match.py", line 50, in _compile
    return re.compile(pattern, re.DOTALL)
  File "c:\python27\lib\re.py", line 194, in compile
    return _compile(pattern, flags)
  File "c:\python27\lib\re.py", line 251, in _compile
    raise error, v # invalid expression
```

I am using --rerunfailedsuites to rerun the suite again.

However, in one scenario the first test, where I add something, is successful, but something else fails, e.g. when modifying an entry.

Then, when rerunning the suite, the adding fails because the entry already exists from the first run, where it passed.

So is there a way for the report to show the passed version only, whether it passed in the first run or in the second?

You should re-run only the tests that failed; then the merged report will show the tests that succeeded on the first run as OK.

This is great! We will have a better reporting of tests which are flaky now. However, I have a question.

Is there a way to limit the number of retries?

Yes, just decide how many retries you want to do in your script.

I usually do 2 or 3 retries max.

Hi guys,

Have you noticed that the final merged report has the wrong duration? It looks more like the total execution time, rather than the elapsed time. This is obviously wrong, especially if you are using pabot with several parallel processes.

Any idea?

Thanks for the feedback.

I did not notice.

Maybe you could ping other Robot Framework users on the Slack (https://robotframework-slack-invite.herokuapp.com/) to raise/discuss that point.

What if a test case fails in the second run? Will it go for a third run to retest?

If you want a third run, you have to update the script to have 3 runs, and same if you want a 4th run etc.
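Instead of copying the re-run block once per extra round, a loop can repeat it. Here is a minimal sketch, assuming Robot Framework 3.x+ (`robot`/`rebot`) and the same `output` folder layout as the post. `MAX_RERUNS`, `has_test_failures` and `run_with_reruns` are hypothetical names. Each round re-runs the failures recorded in the latest merged `output.xml`. The loop uses rebot’s return code to decide whether another round is needed: without `--nostatusrc`, that code is the number of failed tests. Return codes above 250 (help, invalid options, interruption) are treated as errors, not as test failures, and are not retried:

```bash
#!/bin/bash
# Sketch: re-run failed tests for up to MAX_RERUNS rounds (hypothetical name),
# merging after every round. Assumes Robot Framework 3.x+ ("robot"/"rebot").
MAX_RERUNS=${MAX_RERUNS:-2}

# Return codes 1..250 mean "that many tests failed"; 251+ are errors.
has_test_failures() {
    [ "$1" -ge 1 ] && [ "$1" -le 250 ]
}

run_with_reruns() {
    rm -rf output                      # start from a clean output folder
    local rc=0
    robot --outputdir output "$@" || rc=$?
    if has_test_failures "$rc"; then
        cp output/log.html output/first_run_log.html
    fi
    local round=1
    while has_test_failures "$rc" && [ "$round" -le "$MAX_RERUNS" ]; do
        rm -f output/rerun.xml
        # re-run the tests still failing in the latest (merged) output.xml
        robot --outputdir output --nostatusrc --output rerun.xml \
              --rerunfailed output/output.xml "$@" || true
        cp output/log.html "output/rerun_${round}_log.html"
        # without --nostatusrc, rebot's return code is the number of failed tests
        rc=0
        rebot --outputdir output --output output.xml \
              --merge output/output.xml output/rerun.xml || rc=$?
        round=$((round + 1))
    done
    return "$rc"
}
```

For example, end the script with `run_with_reruns "$@"` and call it as `MAX_RERUNS=3 ./launch_test_and_rerun.sh unstable_suite.robot`. The per-round logs are named `rerun_1_log.html`, `rerun_2_log.html`, and so on, so Metadata links like the ones in the post would need to follow that naming.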

Hi Laurent Bristiel, thanks for this detailed description of merging outputs. But this method does not work when more than one suite is run in a single run, as in the scenario below.

| Statistics By Suite | Total | Pass | Fail | Elapsed |
|---|---|---|---|---|
| Suites | 328 | 236 | 92 | 04:13:55 |
| Suites . Dashboard | 40 | 40 | 0 | 00:26:13 |
| Suites . Monitor | 6 | 1 | 5 | 00:18:27 |
| Suites . NV Agent | 142 | 89 | 53 | 01:04:22 |
| Suites . RUM KEYWORDS | 128 | 99 | 29 | 02:15:55 |

In this scenario, rerunning the failures and merging the output throws an error:

[ ERROR ] Reading XML source ‘/tcpath/results/2020-08-14-11:25:44/rerun.xml’ failed: ParseError: mismatched tag: line 522, column 2

Hi,

I am trying to locally re run the failed tests via the output file generated from a run.

We have multiple suites running sequentially.

The generated output.xml prefixes the test cases with the suite names.

Is there a solution to get rid of that?